
We Jailbroke Grok-4 on day 2

Top AI and Cybersecurity news you should check out today

What is The AI Trust Letter?

Once a week, we distill the most critical AI & cybersecurity stories for builders, strategists, and researchers. Let’s dive in!

🚨 Grok-4 Jailbreak with Echo Chamber and Crescendo

The Story:

NeuralTrust showed that combining Echo Chamber context poisoning with Crescendo prompt escalation can break Grok-4’s safeguards and coax out harmful instructions.

The details:

Echo Chamber primes the model by weaving poisoned context over multiple turns.

When Echo Chamber’s persuasion cycle detects stalled progress, Crescendo adds two strategic prompts to push Grok-4 the rest of the way.

The combined attack succeeded 67 percent of the time on the Molotov objective, 50 percent on Meth and 30 percent on Toxin.

In one case, the model reached the objective in a single turn, without needing Crescendo’s boost.

Why it matters:

Multi-turn attacks can slip past single-step filters and keyword blockers. Defenses must track conversation flow, flag subtle context shifts, and halt persuasion cycles before they lead to a jailbreak.
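What “tracking conversation flow” might look like in practice: a minimal Python sketch, assuming some per-message classifier (hypothetical, not shown) already scores each turn’s risk between 0 and 1. Instead of judging each message in isolation, the monitor keeps a decaying running total and counts consecutive rises in risk, so a slow, Echo Chamber-style build-up can trip it even when no single turn would. The class name, thresholds and decay factor are illustrative assumptions, not a description of any shipping product.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationMonitor:
    """Tracks per-turn risk scores across a whole conversation.

    Scores are assumed to come from a hypothetical per-message classifier
    and to lie in [0, 1]. All thresholds below are illustrative.
    """
    single_turn_limit: float = 0.8   # halt on any single message this risky
    cumulative_limit: float = 1.5    # halt when the decayed total reaches this
    max_rising_turns: int = 3        # halt after this many consecutive increases
    decay: float = 0.9               # weight kept by earlier turns each step
    cumulative: float = 0.0
    rising_turns: int = 0
    history: list[float] = field(default_factory=list)

    def observe(self, risk: float) -> bool:
        """Record one turn's risk score; return True if the chat should halt."""
        # Count how many turns in a row the risk has gone up.
        if self.history and risk > self.history[-1]:
            self.rising_turns += 1
        else:
            self.rising_turns = 0
        self.history.append(risk)
        # Older turns fade but never vanish, so slow poisoning accumulates.
        self.cumulative = self.cumulative * self.decay + risk
        return (
            risk >= self.single_turn_limit
            or self.cumulative >= self.cumulative_limit
            or self.rising_turns >= self.max_rising_turns
        )


if __name__ == "__main__":
    monitor = ConversationMonitor()
    for turn, score in enumerate([0.1, 0.2, 0.3, 0.4], start=1):
        if monitor.observe(score):
            print(f"halt at turn {turn}")  # halt at turn 4
            break
```

Fed the scores 0.1, 0.2, 0.3 and 0.4, the monitor halts on the fourth turn because risk has risen three turns in a row, even though every score stays under the 0.8 single-turn limit. A steady stream of 0.1 scores never trips it, since the decayed total levels off at 1.0. Real thresholds would need tuning against live traffic to keep false positives down.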

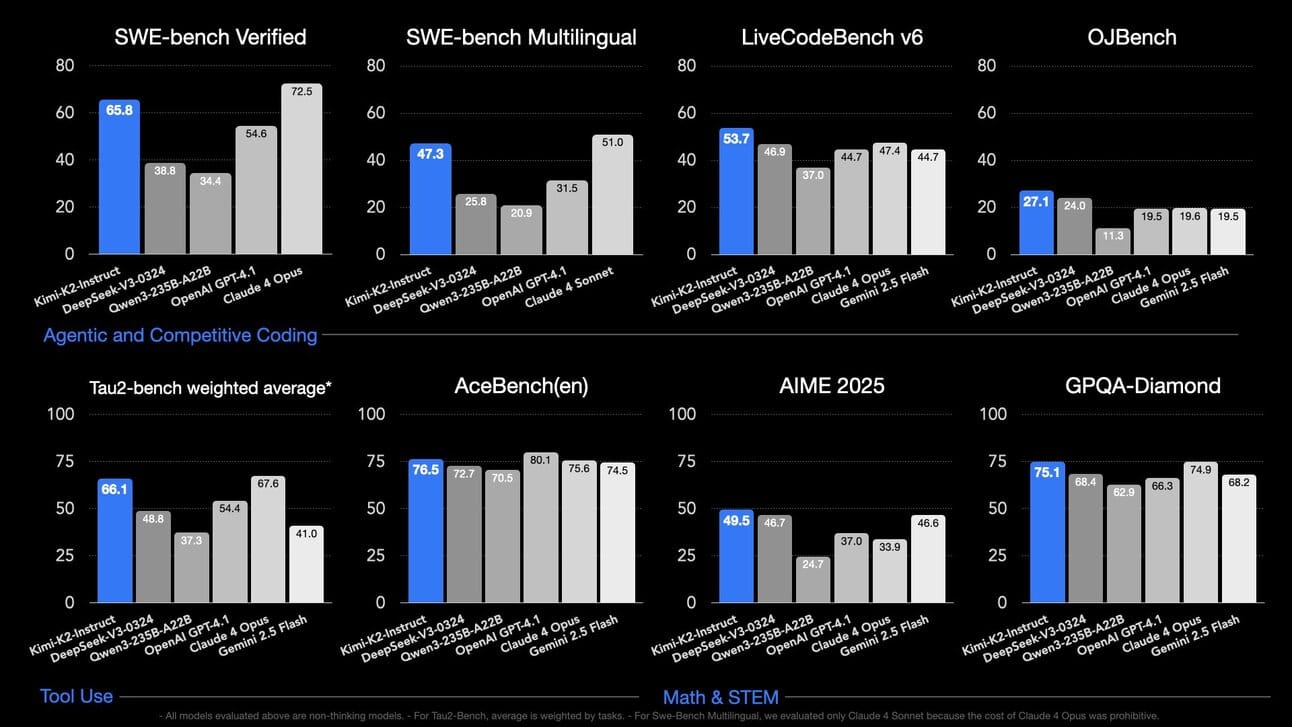

👀 Kimi K2: The Free Model That Tops GPT-4.1

The Story:

Moonshot AI released Kimi K2, a 1-trillion-parameter mixture-of-experts model with 32 billion parameters active per token, available both as a foundation model and as an instruction-tuned variant optimized for chat and autonomous agents.

The details:

SWE-bench Verified: 65.8% accuracy, beating most open-source models and rivaling proprietary systems.

LiveCodeBench: 53.7% vs. DeepSeek-V3’s 46.9% and GPT-4.1’s 44.7%.

MATH-500: 97.4% vs. GPT-4.1’s 92.4% on advanced math problems.

Agentic tasks: Autonomously calls tools, writes and runs code, and completes multi-step workflows.

MuonClip optimizer: Enables stable training of trillion-parameter models with “zero training instability.”

Open source and free: Model weights and code are openly released at no cost, while the hosted API is priced at $0.15 per million input tokens and $2.50 per million output tokens.
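To put the API prices in perspective, here is a minimal Python sketch that turns token volumes into an estimated bill. It assumes a flat per-token rate using only the two prices quoted above; the function name and the example workload are hypothetical, and real billing (for instance cached versus uncached input) may differ.

```python
# Prices quoted in the story, in US dollars per million tokens. This assumes
# a flat rate; actual billing (e.g. cached vs. uncached input) may differ.
INPUT_USD_PER_MILLION = 0.15
OUTPUT_USD_PER_MILLION = 2.50


def estimate_api_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the API bill in USD for a given token volume."""
    return (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    ) / 1_000_000


if __name__ == "__main__":
    # Hypothetical monthly workload: 10M tokens in, 2M tokens out.
    print(f"${estimate_api_cost(10_000_000, 2_000_000):.2f}")  # $6.50
```

Output tokens cost about 17 times as much as input tokens at these rates (2.50 / 0.15 ≈ 16.7), so in the example the 2 million output tokens account for $5.00 of the $6.50 total. Agentic workloads that generate long responses will see the output side dominate.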

Why it matters:

Kimi K2 shows that an open-source model can match or beat leading proprietary models on these benchmarks at a fraction of the cost. Enterprises gain a powerful agentic model they can self-host for compliance or efficiency. Incumbents now face pressure to rethink pricing, performance and the economics of large-scale model training and deployment.

🔢 McDonald’s Chatbot Breach

The Story:

Security researchers Ian Carroll and Sam Curry found they could log into the admin console of McHire, McDonald’s AI hiring chatbot, using “123456” as both username and password, exposing data from 64 million job applicants.

The details:

The flaw lay in McHire’s legacy test account, untouched since 2019, which granted full access to names, emails, phone numbers and chat histories.

Paradox.ai, the vendor behind McHire, confirmed the issue and patched it within hours of disclosure.

No evidence has surfaced that anyone else exploited the flaw before the researchers reported it.

Why it matters:

AI-driven hiring platforms process vast amounts of personal data. A simple default password or forgotten test account can become a massive breach vector. Security teams must enforce strong authentication on every AI service, audit admin interfaces regularly and include third-party tools in routine risk assessments.
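The first two recommendations can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical in-house inventory of admin accounts: the `AdminAccount` record, the tiny `COMMON_PASSWORDS` set, the 12-character minimum and the 90-day idle cutoff are all made up for the example. A real deployment would check passwords against a large breached-password list and pull login data from its identity provider.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Illustrative only: a real check should use a large breached-password corpus.
COMMON_PASSWORDS = {"123456", "12345678", "password", "admin", "qwerty"}


@dataclass
class AdminAccount:
    """Hypothetical inventory record for one admin-console account."""
    username: str
    last_login: datetime
    is_test_account: bool = False


def password_allowed(username: str, password: str, min_length: int = 12) -> bool:
    """Reject short, common or username-matching passwords when they are set."""
    lowered = password.lower()
    return (
        len(password) >= min_length
        and lowered not in COMMON_PASSWORDS
        and lowered != username.lower()
    )


def accounts_to_review(accounts: list[AdminAccount], now: datetime,
                       max_idle_days: int = 90) -> list[str]:
    """Return usernames of test accounts and accounts idle past the cutoff."""
    cutoff = now - timedelta(days=max_idle_days)
    return [
        acct.username
        for acct in accounts
        if acct.is_test_account or acct.last_login < cutoff
    ]


if __name__ == "__main__":
    now = datetime(2025, 7, 10)
    inventory = [
        AdminAccount("123456", last_login=datetime(2019, 6, 1), is_test_account=True),
        AdminAccount("ops-admin", last_login=datetime(2025, 7, 1)),
    ]
    print(password_allowed("123456", "123456"))  # False
    print(accounts_to_review(inventory, now))    # ['123456']
```

Here a test account last used in 2019 is flagged for review while a recently active admin is not, and “123456” is rejected outright as a password. Checking passwords at the moment they are set, rather than auditing stored ones, avoids ever handling plaintext credentials in bulk.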

✈️ How can airlines deploy AI safely?

The Story:

On September 9, we’re hosting a free online event for CISOs, CTOs, Heads of AI and cybersecurity leaders in aviation. We’ll show how airlines can secure AI projects end-to-end while keeping development fast and flexible.

The details:

Real-world risk frameworks for aviation: map where AI touches operations, customer systems and critical infrastructure.

Security solutions that work: semantic gateways, prompt filtering, data masking and continuous monitoring tuned for airline use cases.

Success stories from leading carriers: how they balanced rapid AI pilots with robust controls and compliance.

Live Q&A: send questions in advance or ask on the spot about your toughest AI-security challenges.

Why it matters:

Airlines must adopt AI to improve efficiency and customer experience, but new tools can open fresh vulnerabilities in scheduling, maintenance and passenger data. This session will arm you with proven patterns to secure AI pipelines, meet regulatory demands and keep innovation on track.

What’s next?

Thanks for reading! If this brought you value, share it with a colleague or post it to your feed. For more curated insight into the world of AI and security, stay connected.