- The AI Trust Letter

- Posts

- Targeted Ads Arrive to ChatGPT

Targeted Ads Arrive to ChatGPT

Top AI and Cybersecurity news you should check out today

Welcome Back to The AI Trust Letter

Once a week, we distill the most critical AI & cybersecurity stories for builders, strategists, and researchers. Let’s dive in!

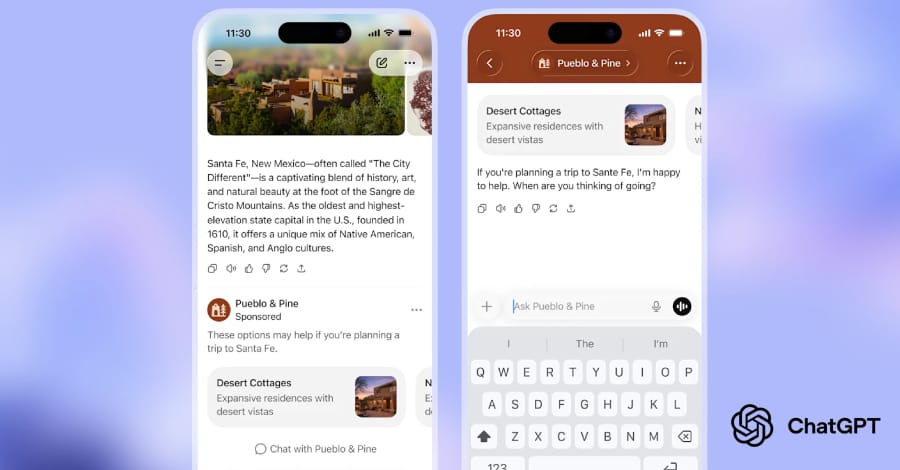

📲 OpenAI to Show Ads in ChatGPT for Free Users

The Story:

OpenAI announced that it will begin showing advertising to users on the free tier of ChatGPT. The change is intended to help fund continued free access while preserving paid options without ads.

The details:

Starting in 2026, free ChatGPT accounts will see sponsored content in some app interfaces.

Advertisements will be labeled clearly, and OpenAI says they will not alter core model responses.

The company plans to offer controls to limit ad frequency and relevance settings.

Paid subscriptions will remain ad-free, with additional features and higher usage limits.

OpenAI says the goal is to balance user experience with sustainable business support for free users.

Why it matters:

Introducing ads into AI assistants changes the economics of consumer access. For organizations and developers relying on free tiers for experimentation, this may affect cost forecasts and usage patterns. It also raises broader questions about how ad funding intersects with content neutrality and response quality in AI systems.

🤖 Grok AI Must Block Misuse After Safety Backlash

The Story:

The California Attorney General issued a cease-and-desist order to XAI, the company behind Grok, after finding that users were creating and sharing sexually explicit deepfake images of private individuals using the platform. The order demands immediate action to prevent further misuse.

The details:

The AG’s office says complaints came from people whose likenesses were used without their consent in explicit content generated via Grok’s image tools.

Authorities allege that the platform failed to implement adequate safeguards to stop harmful deepfake creation.

The order requires XAI to block the generation and distribution of sexually explicit deepfake images and provide a compliance plan within 30 days.

It also directs the company to respond to requests from victims to remove content and take steps to prevent recurrence.

XAI has not publicly detailed how it will meet the demands, but the action highlights rising regulatory scrutiny around generative image misuse.

Why it matters:

This marks one of the first times a state regulator has taken formal enforcement action against an AI provider over deepfake content targeting private individuals. For teams deploying generative systems that handle images or bills of rights, it underscores the need for robust misuse prevention, consent mechanisms, and rapid takedown processes as part of responsible product design.

💡 California’s AI Laws: What Changes in January 2026

The Story:

California is moving fast on AI regulation. A growing set of state laws is starting to define what “responsible AI” actually means in practice, especially around transparency, consumer rights, and harmful or deceptive uses of AI.

The details:

California is not treating AI as a single category. New laws cover different risks, from deepfakes and election content to automated decision-making and consumer disclosures.

A major theme is transparency. Companies may be expected to clearly disclose when users are interacting with AI or when content has been generated or manipulated.

Deepfakes are a central focus. Rules increasingly target non-consensual sexual content, impersonation, and misleading synthetic media.

Some laws raise the bar for documentation and accountability, pushing teams to prove what safeguards exist, not just claim they do.

For companies operating nationally, California often becomes the de facto baseline, since it is hard to maintain different policies state by state.

Why it matters:

For most AI teams, the risk is not that regulation will “stop innovation.” It’s that compliance will show up late in the product cycle, when the system is already deployed and hard to change. California’s direction makes it clear that guardrails, disclosure, and traceability are becoming product requirements.

If you build AI systems that generate content, influence decisions, or interact with users at scale, it’s worth treating these laws as a preview of what broader regulation may look like.

🚨 Single-Click Data Exfiltration From Microsoft Copilot

The Story:

Security researchers have identified a new form of attack against AI systems they call a reprompt attack. This technique manipulates a model’s internal prompt history to force unintended behavior in chained or multi-turn conversations.

The details:

In a reprompt attack, an adversary subtly inserts new instructions into the context that the model carries across turns.

Unlike traditional prompt injection, which directly alters the user’s visible prompt, reprompt attacks work deeper in the session history, influencing future outputs without obvious flags.

The researchers demonstrated that even models with standard safety filters can be steered this way if the internal context is not reset or scrubbed properly between steps.

They showed how reprompting could leak restricted content, change output style, or bypass simple rule checks later in a session.

Mitigations include clearing sensitive context between workflows, strict segmentation of multi-turn sessions, and stronger guardrails around how agents carry state forward.

Why it matters:

As AI systems become more interactive and stateful, the assumptions about how context is managed matter more. This attack highlights a category of risk that isn’t just about malicious language in a single prompt, but about how the history of interaction can be weaponized. For teams building multi-turn workflows or autonomous agents, protecting context and controlling session state is now an essential part of defense.

🖥️ Shadow AI Shows Up as a Compliance Front Line

The Story:

A new report highlights shadow AI (unsanctioned use of generative tools by employees) as a growing compliance and risk issue. Organizations are discovering that AI is being used outside official channels, creating gaps in control and visibility.

The details:

Many employees use external AI platforms for work tasks without IT or risk team approval.

Shadow AI use often involves uploading sensitive corporate data into third-party models, bypassing data governance controls.

This behavior is driven by speed and convenience, not malicious intent, but it exposes firms to legal and compliance risks.

Risk teams report that monitoring and discovering these tools in use is difficult, as they may run in personal accounts or unmonitored cloud apps.

The report urges better integration between compliance programs and AI enablement efforts to track usage and enforce policies.

Why it matters:

Shadow AI is not just a productivity issue, it’s a blind spot in risk and compliance programs. When employees feed proprietary data into unvetted models, organizations lose control over confidentiality, audit trails, and regulatory adherence. To manage this, companies will need formal discovery processes, clear usage policies, and continuous monitoring that spans sanctioned and unsanctioned AI activity.

What´s next?

Thanks for reading! If this brought you value, share it with a colleague or post it to your feed. For more curated insight into the world of AI and security, stay connected.