OpenAI launches ChatGPT Agent

Top AI and Cybersecurity news you should check out today

What is The AI Trust Letter?

Once a week, we distill the most critical AI & cybersecurity stories for builders, strategists, and researchers. Let’s dive in!

🛠️ OpenAI Launches General-Purpose Agent in ChatGPT

The Story:

OpenAI debuted the ChatGPT Agent, a built-in assistant that can automatically handle a range of computer-based tasks, from managing your calendar to building slide decks and running code directly inside ChatGPT.

The details:

Unified workflow: The new agent merges web browsing, deep research, code execution and document editing into one virtual workspace.

Task automation: Ask it to review upcoming meetings, draft presentations, analyze data sets and output editable slides or spreadsheets.

User control: All actions run in a secure sandbox and require your approval before accessing accounts or sending emails.

Rollout: The feature is available now to ChatGPT Pro, Plus and Team subscribers.

Why it matters:

This marks a shift from simple Q&A to AI-driven automation in daily workflows. Security teams should review sandbox safeguards, permission prompts and audit trails to ensure these agents can’t overreach or expose sensitive data.
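For teams reviewing permission prompts and audit trails, the general pattern can be sketched in a few lines. This is a minimal illustration, not how OpenAI implements ChatGPT Agent: the action names, the `SENSITIVE_ACTIONS` set and the `run_agent_action` helper are all hypothetical.

```python
import json
from datetime import datetime, timezone

# Hypothetical list of actions that must be confirmed by a human first.
SENSITIVE_ACTIONS = {"send_email", "access_account"}


def run_agent_action(action, params, approve, audit_log):
    """Gate an agent action behind approval and record the decision.

    `approve` is a callback (for example, a UI prompt) that returns True or False.
    Every decision, approved or not, is appended to `audit_log` as a JSON line.
    """
    needs_approval = action in SENSITIVE_ACTIONS
    approved = approve(action, params) if needs_approval else True
    audit_log.append(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "needs_approval": needs_approval,
        "approved": approved,
    }))
    return approved
```

The point of the sketch is that the log entry is written whether or not the action is approved, so denied attempts remain visible to reviewers.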

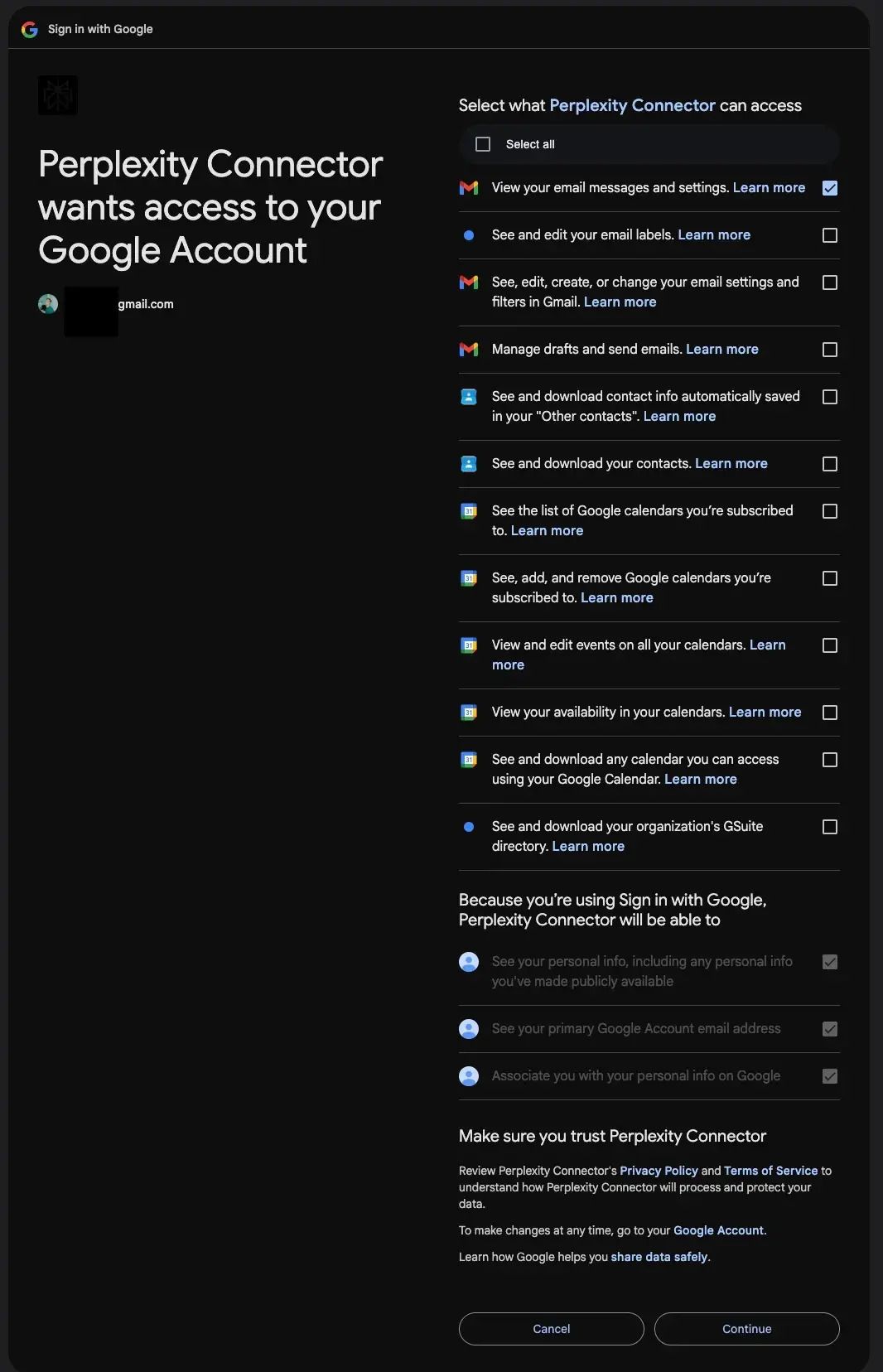

👀 Think twice before granting AI broad data access

The Story:

AI assistants, from browser extensions to meeting transcribers, are asking for sweeping permissions to your calendars, emails, contacts and files. Many users click “Allow” without realizing how much private data they hand over.

The details:

Overreaching calendar control: Some AI-powered browsers request rights to create, edit and delete events, send invites, read guest lists and even download entire corporate directories.

Deep inbox access: Meeting transcription services routinely ask to read, archive and forward every email in your inbox, not just the conversation they’re transcribing.

Full contact dumps: Several AI productivity tools seek permission to export all your contacts and company address books in bulk, exposing colleagues’ personal information.

Persistent data retention: There’s often no clear policy on how long AI vendors store your data or whether it’s used to train their models, creating unknown downstream risks.

Why it matters:

Granting AI apps carte blanche to personal or corporate data undermines every security best practice, from least-privilege access to data minimization. A single overbroad permission can open a backdoor into sensitive calendars, confidential emails, and private contacts, putting both personal privacy and business security at risk.
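One way to put least-privilege into practice is to compare the permissions an app requests against what it plausibly needs. The sketch below assumes a simple allowlist policy; the app names and scope strings are hypothetical placeholders, not real provider identifiers.

```python
from dataclasses import dataclass

# Hypothetical allowlist: the scopes each app actually needs to do its job.
ALLOWED_SCOPES = {
    "meeting-transcriber": {"calendar.read"},
    "ai-browser": {"calendar.read", "contacts.read"},
}

# Hypothetical scopes an organization might treat as high-risk for any app.
HIGH_RISK_SCOPES = {
    "mail.read_all",
    "mail.forward",
    "contacts.export",
    "directory.download",
}


@dataclass
class ScopeReview:
    approved: set   # requested and on the allowlist
    excessive: set  # requested but not on the allowlist
    high_risk: set  # excessive scopes that are also high-risk


def review_scopes(app, requested):
    """Split an app's requested scopes into approved, excessive and high-risk."""
    allowed = ALLOWED_SCOPES.get(app, set())  # unknown apps get nothing by default
    requested = set(requested)
    excessive = requested - allowed
    return ScopeReview(
        approved=requested & allowed,
        excessive=excessive,
        high_risk=excessive & HIGH_RISK_SCOPES,
    )
```

For example, `review_scopes("meeting-transcriber", ["calendar.read", "mail.read_all"])` would approve only `calendar.read` and flag `mail.read_all` as both excessive and high-risk.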

🎥 Netflix uses GenAI for the first time

The Story:

Netflix used generative AI for the first time on screen in its Argentine sci-fi series El Eternauta, employing AI to create a building-collapse visual effect rather than relying solely on traditional VFX.

The details:

Speed boost: The AI-generated sequence was completed ten times faster than it would have been with conventional VFX methods.

Cost efficiency: AI made high-end effects feasible under a tighter budget, freeing resources for other production needs.

Creative workflow: The team used AI in previsualization and shot planning, giving directors new ways to explore scenes before filming.

Human collaboration: CEO Ted Sarandos emphasized that AI tools support artists rather than replace them, enhancing creative possibilities.

Why it matters:

This shows generative AI moving from previsualization into final on-screen footage at a major studio, with reported gains in speed and cost. Netflix frames the tools as support for artists rather than a replacement for them.

🏦 How can banks deploy AI safely?

The Story:

On the 2nd of October, we’re hosting a free online event for CISOs, CTOs, Heads of AI and cybersecurity leaders in banking and financial services. We’ll show how companies can secure AI projects end-to-end while keeping development fast and flexible.

The details:

Real-world risk frameworks for banks: map where AI touches operations, customer systems and critical infrastructure.

Security solutions that work: semantic gateways, prompt filtering, data masking and continuous monitoring tuned for banking use cases.

Success stories from leading banks: how they balanced rapid AI pilots with robust controls and compliance.

Live Q&A: send questions in advance or ask on the spot about your toughest AI-security challenges.

Why it matters:

Banks must adopt AI to improve efficiency and customer experience, but new tools can open fresh vulnerabilities. This session will arm you with proven patterns to secure AI pipelines, meet regulatory demands and keep innovation on track.

🎙️ Podcast recommendation of the week

Benjamin Mann co-founded Anthropic after departing OpenAI, where he helped build GPT-3. He left OpenAI along with other security experts with the goal of putting alignment and safety at the center of AI development.

In this podcast episode, Ben shares why AI progress shows no sign of slowing and how we must guide it responsibly. Great insights and conversation with Lenny Rachitsky.

What's next?

Thanks for reading! If this brought you value, share it with a colleague or post it to your feed. For more curated insight into the world of AI and security, stay connected.